Available server types

With our SQL integration, you can display anything that can be expressed by SQL. Plecto supports the following SQL server types:

MySQL

PostgreSQL

Microsoft SQL Server 2005 or later (including Azure SQL)

Oracle

Snowflake

BigQuery*

*server-specific configuration process

Technical details

Update frequency: Custom (from every 1 minute to once a day)

Supports custom fields? Yes

Supports deleted registrations? No

Update frequencies

Our system will automatically increase the SQL update frequency of a data source, depending on time spent importing from your server. Examples:

If the update frequency is set to 1 minute, but the import takes between 30 - 120 seconds, Plecto will adjust the update frequency to 5 minutes.

If the update frequency is set to 1 or 5 minutes, but the import takes between 120 - 180 seconds, Plecto will adjust the update frequency to 15 minutes.

If the update frequency is set to 1, 5, or 15 minutes, but the import takes between 180 - 360 seconds, Plecto will adjust the update frequency to 1 hour.

Step 1: Configure your firewall

Before you try to import data from your SQL server, please make sure to configure your firewall to only allow traffic from the following source:

51.159.130.44

On June 28-29, 2025, Plecto performed a migration to a European-based hosting provider, and therefore the IP address listed above is replacing the old Plecto IPs. The address above is replacing the old IPs used for traffic, so please make sure to update your configuration if you have not yet done so.

The following Plecto IP addresses are getting deprecated after June 28, 2025:

54.76.22.100

54.76.22.43

52.210.249.227

Step 2: Connect your SQL server to Plecto

We recommend that you create a new username and password that are specifically intended for connecting your database to Plecto. This way, you will avoid sharing the same password across databases. It will also allow you to limit permission for the created user to only have read access.

Go to Data sources > New data source and choose your database.

Fill out the credentials. If you have access to the database, you should be able to gather your credentials.

Note on Hostname: You need to provide a public IP. If you add a local IP, the connection will not work. Learn more.

Note on Username when connecting with Azure: If you are using SQL server on Azure, you should add username@[hostname] in the username field. For example, if the hostname is plecto.database.windows.net, then you should write username@plecto.database.windows.net in the username field.

If you want to use the SSH Tunnel: SSH allows you to transport data over a secure and encrypted connection. Please contact your organization's technical staff if you want to connect your database to Plecto using an SSH tunnel but don't know how.

Click Continue and move to Step 3 where you'll have to write an SQL statement.

Step 3: Write an SQL statement

Once you connect Plecto to the database, you need to write a SELECT statement where you include the following standard fields:

member_id (required to create the user, if ID is not found)

member_name (required to map data to members in Plecto)

date (required date associated with this record (always in UTC!))

reference (required unique identifier; can be used to update registrations)

team_name (optional; we will upsert it in the Plecto data source)

How to limit the SQL statement

With each import, Plecto will import and update all data, including the rows that have already been imported but have not changed since the last import run. Therefore, be mindful of how much data you actually need to import – the more optimal your setup, the faster Plecto will be able to process your data.

To speed up the updates and have a lower load on your servers, please limit your statement so that it only returns the latest changed data. You can use the variable {{ last_update_time }} to filter for items that did not get imported since the last run.

If you have a date field, you can add the following function to the clause, and the variable will be replaced with the latest date and time when the data source was updated:

date field >= {{ last_update_time }}You can also add an upper date limit that will keep Plecto from importing data newer than the indicated limit. Use the following function:

date field <= {{ upper_date_limit }}You can also use a top 100 in the SELECT function. Remember to order DESC (descending) to select the newest records.

last_update_time and upper_date_limit

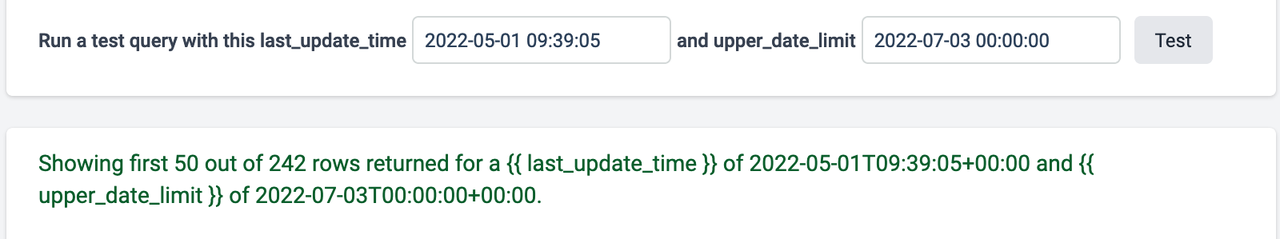

When you write an SQL statement, you can add a start date (last_update_time) and end date (upper_date_limit) that will make your database only import data from within that period.

You can run a test query to see how many rows there are during the indicated period.

Member ID Source

Member ID Source tells Plecto which system is the data supplier. The Member ID Source should be the same system name you see in the Data suppliers column under Settings > Employees. Both Member ID Source and Data suppliers refer to the same system.

If both places have different system names, Plecto will create new employees along with each data import, which might result in duplicate employee profiles. Therefore, we recommend using the same Member ID Source name as is the name of your data supplier.

SQL data import

The query: Write an SQL statement that returns a number of rows, each corresponding to a registration in our system. Each row can have any number of columns that will be saved on the registration.

Historical data: Plecto can import historical data once. Then we keep the data up to date by importing every [x] minutes, but only the rows that have changed.

Import logs: You can see all the import logs, both automatic and manual, by clicking the View logs button in the top-right corner in the data source settings.

New data: With each import, Plecto will import all data. If you only want to see the new/updated data, you can limit your SQL statement (read below). By limiting the statement, Plecto will only import rows that have changed, for example, within the last 5 minutes.

Raw data: Plecto works best if you import raw data. That means one row should represent one item. Do not import already computed metrics. Plecto can calculate these metrics from the raw data using formulas.

Registration import limit on SQL

Plecto limits the number of registrations that can be imported per query based on the update frequency.

Update frequency | Max. number of registrations |

|---|---|

1 min | 1000 |

5 min | 4000 |

15 min | 10 000 |

30 min | 16 000 |

1 hour | 20 000 |

2 hours | 30 000 |

4 hours | 40 000 |

8 hours | 60 000 |

1 day | 100 000 |

Manual data import

Starting July 2022, our SQL integrations support manual data import.

Open the data source settings and click Import historical data.

Choose the start and end dates and click Start import.

Keep in mind that if you want to import lots of historical data, you should split data import into multiple sessions. If you import a lot of old data at once, the database might not be able to complete the import.